Splunk

Splunk is a company that produces software for searching, monitoring and analysing data. It is commonly used as a central repository to which logging data is sent, so that it can be analysed, correlated and graphed in real time. Analysts usually interact with Splunk via a web browser; by default, the web interface runs on port 8000. Splunk aims to work with any type of data, so unlike a tool such as Wireshark, which needs data in a specific format, Splunk can work with almost any text-based data. One benefit is that collecting logs from multiple devices in one place can give you a better understanding of a scenario than any single log would. In a networking context, these could be firewall logs, server logs, authentication logs, et cetera.


Getting Splunk

While Splunk is a commercial product, free versions are available. First, install Splunk: go to https://www.splunk.com/, click on Free Splunk and register an account. Then click on download free Splunk and pick your OS. If you are in the labs, click Linux and then the .deb package. Download and install the .deb.

Note: you are free to do this on the lab machines or on your own mobile Linux device.

Installing Splunk

You should be able to use the GUI to install .deb packages on Ubuntu. If you can't use the GUI, open a terminal, cd to the directory containing the .deb, and run:

sudo dpkg -i splunk*.deb

Then navigate to:

cd /opt/splunk/bin/

And then run:

sudo ./splunk enable boot-start

You will be asked to agree to a licence and to create an administrator username and password. Reboot your machine and log in again; Splunk will now start at boot.

Using Splunk

You should now be able to access the Splunk Web interface at http://127.0.0.1:8000/. Log in with the username and password that you created when you accepted the licence agreement. In this lab, you will review the data sets below and try to answer questions about them.

To upload a file, go to http://127.0.0.1:8000/ and click the large "Add Data" button. On the next page, click the large "Upload" button. You will then be asked to select the source: click "Select File" and choose the file from your system. Below you can see that I have added access.log from /var/log/access.log; if you don't have this file, you could probably add /var/log/auth.log instead. For simplicity, accept the defaults by clicking "Next" until you see the green "Start Searching" button.

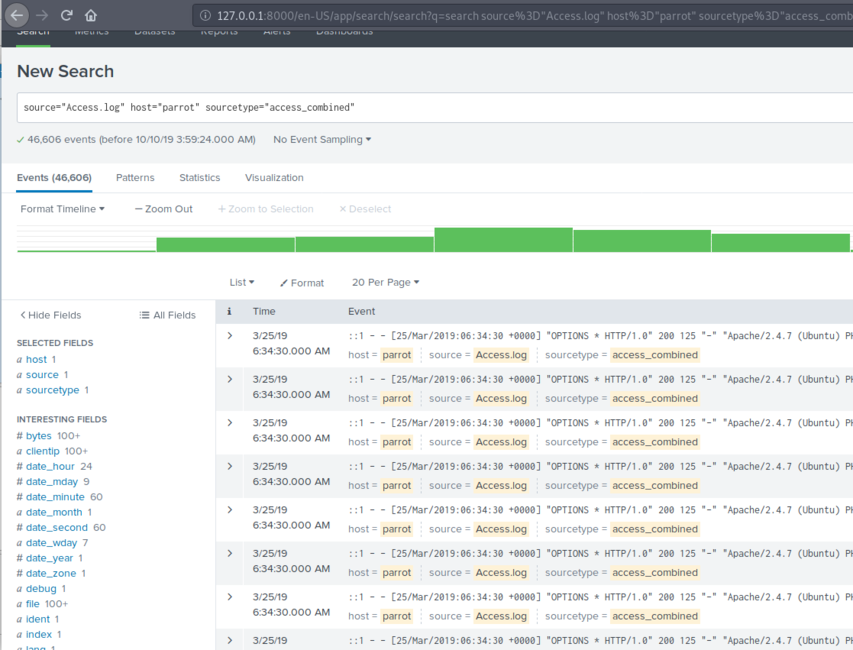

On the search interface, I want to draw your attention to three elements. First, you can use the search bar at the top to search for text matches. Second, you can use the timeline, visualised as green bars, to filter on a single day, week or month. Finally, you can use the Interesting Fields list on the left-hand side: clicking on one of these fields shows its values along with percentages.

Note that each time you click on an element, it is added to the search filter in the bar at the top. After each search, be careful to reset both the search bar and the search period, which is found just to the right of the search bar. I usually reset the search period to "All time", under Presets, after each search.

Data Set 1

This dataset is one week of access data from intsec-wiki.murdoch.edu.au. The IP addresses and timestamps are real, but any student information that could link the two has been removed. You can get the file here. Unzip it and upload it to Splunk. You should also open the file in a text editor, just to get a feel for its contents.
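Since these are Apache access logs that include user-agent strings, they are most likely in Apache's "combined" log format (an assumption; check a few lines in your text editor). The Python sketch below splits one such line into its fields: client IP, timestamp, request, status code, response size, referer and user-agent. These are the kinds of values you will be searching and filtering on in Splunk. The sample line and its IP address are hypothetical.

```python
import re

# Assumed Apache "combined" log format:
# host ident user [time] "request" status bytes "referer" "user-agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<bytes>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Split one log line into named fields, or return None if it doesn't match."""
    match = COMBINED.match(line)
    return match.groupdict() if match else None


# Hypothetical line (documentation IP range), not taken from the lab data set.
sample = ('203.0.113.7 - - [12/Mar/2018:14:05:31 +0800] '
          '"GET /index.php HTTP/1.1" 200 5120 '
          '"-" "Mozilla/5.0 (X11; Linux x86_64)"')

fields = parse_line(sample)
for name, value in fields.items():
    print(f"{name:<8} {value}")
```

If a line in the real file doesn't match, the format probably differs from the assumed one, and the pattern would need adjusting.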

Once it is in Splunk, can you answer the following questions?

- What is the most common user-agent string in the data set? If you have never heard of user-agent strings before, please see: https://www.whatismybrowser.com/detect/what-is-my-user-agent

- What are the three most common IP addresses accessing the server? Ignore any localhost addresses, such as 127.0.0.1.

- On which day of the week is the server most commonly accessed?

- On which day of the week is the server least commonly accessed?

- Look at the Interesting field date_hour. Does it look correct? If not, do you think the clock was incorrectly set on the server? Where do you think intsec-wiki was hosted? This server no longer exists, so let's use csn.murdoch.edu.au as a stand-in. Is there a timezone mismatch between the users and the logs? Hint:

- What is its IP address? Try: nslookup csn.murdoch.edu.au

- Can you geolocate it? Try: https://tools.keycdn.com/

- Remember that you are looking at Apache server logs. The more you know about HTTP, the better you will be able to search the data. Have a read of HTTP Status codes: https://en.wikipedia.org/wiki/List_of_HTTP_status_codes

- Can you work out how to search for 404 errors?

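- What happens if you want to know about all 400-level errors? Can you search on "40* "? Note that this wildcard only matches codes beginning with 40 (400 to 409), so which 4xx codes might it miss?

- 4xx codes are client errors; what about server errors (5xx)? Can you search on "50* "?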

- Find a peak in the timeline graph. If you double click on this bar you will be filtering on just this day.

- Click through the many pages of logs that match your search criteria on this day.

- If you find any IP address doing anything amiss, you can geolocate it: https://tools.keycdn.com/
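If you want to cross-check the answers Splunk gives you, the same questions can be answered with a short script. This Python sketch assumes the Apache "combined" log format, treats 127.0.0.1 and ::1 as the localhost addresses to ignore, and assumes the site's users were in Perth (UTC+8, no daylight saving) purely to illustrate a timezone comparison. The sample lines are hypothetical and use documentation-only IP ranges.

```python
import re
from collections import Counter
from datetime import datetime, timedelta, timezone

# Assumed Apache "combined" log format:
# host ident user [time] "request" status bytes "referer" "user-agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<bytes>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)
LOCALHOST = {"127.0.0.1", "::1"}        # addresses the lab asks us to ignore
USER_TZ = timezone(timedelta(hours=8))  # assumption: users are in Perth (UTC+8)


def summarise(lines):
    """Tally user agents, client IPs, weekdays, status classes and hours."""
    agents, ips, weekdays = Counter(), Counter(), Counter()
    statuses, raw_hours, local_hours = Counter(), Counter(), Counter()
    for line in lines:
        m = COMBINED.match(line)
        if m is None:
            continue  # skip lines that don't fit the assumed format
        agents[m["agent"]] += 1
        if m["ip"] not in LOCALHOST:
            ips[m["ip"]] += 1
        when = datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z")
        weekdays[when.strftime("%A")] += 1    # day as recorded in the log
        raw_hours[when.hour] += 1             # hour as written in the log
        local_hours[when.astimezone(USER_TZ).hour] += 1  # hour for UTC+8 users
        statuses[m["status"][0] + "xx"] += 1  # 404 -> "4xx", 503 -> "5xx"
    return agents, ips, weekdays, statuses, raw_hours, local_hours


# Hypothetical lines using documentation-only IP ranges, not the lab data.
sample = [
    '203.0.113.7 - - [12/Mar/2018:14:05:31 +0000] "GET / HTTP/1.1" 200 512 "-" "curl/7.58.0"',
    '203.0.113.7 - - [12/Mar/2018:14:06:02 +0000] "GET /nope HTTP/1.1" 404 210 "-" "curl/7.58.0"',
    '198.51.100.20 - - [13/Mar/2018:02:10:00 +0000] "GET / HTTP/1.1" 500 0 "-" "Mozilla/5.0"',
    '127.0.0.1 - - [13/Mar/2018:03:00:00 +0000] "GET /health HTTP/1.1" 200 2 "-" "curl/7.58.0"',
]

agents, ips, weekdays, statuses, raw_hours, local_hours = summarise(sample)
print("Top user agent:", agents.most_common(1))
print("Top 3 IPs:", ips.most_common(3))
print("Weekdays:", weekdays.most_common())
print("Status classes:", dict(statuses))
```

Grouping on the first digit of the status code covers the whole 4xx or 5xx range, whereas a text search such as "40*" only matches codes that start with 40. Comparing raw_hours with local_hours is one way to see whether a timezone offset explains an odd-looking hour distribution.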

Data Set 2

Remember that earlier I described Splunk as a generic analysis tool; this data set is my attempt to show you how that works. Splunk doesn't need to be familiar with any specific file type: it is generic and can work with any text. The dataset here contains a messy assortment of revisions to the Wikipedia page https://en.wikipedia.org/wiki/Muhammad_Ali. Load this file into Splunk and explore the data.

- Can you find the giant peak in revisions? What happened during this month?

- Refine your search down to the last 12 months in the logs. During this period, what was the most and the least busy day of the week for edits?
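The exact layout of the Wikipedia revisions file isn't described here, so this Python sketch assumes you have already pulled out one ISO 8601 timestamp per revision; the timestamps below are hypothetical. It treats "the last 12 months in the logs" as the 365 days up to the latest revision in the data (not the 12 months before today), then ranks weekdays by edit count.

```python
from collections import Counter
from datetime import datetime, timedelta


def weekday_counts(timestamps, window=timedelta(days=365)):
    """Count revisions per weekday within `window` of the latest revision."""
    times = [datetime.fromisoformat(t) for t in timestamps]
    cutoff = max(times) - window  # "last 12 months" approximated as 365 days
    return Counter(t.strftime("%A") for t in times if t > cutoff)


# Hypothetical revision timestamps, not taken from the lab data set.
revisions = [
    "2019-01-06T09:00:00",  # older than the 365-day window, so it is excluded
    "2020-03-02T12:00:00",
    "2020-03-03T08:30:00",
    "2020-03-03T09:00:00",
    "2020-03-07T10:00:00",
    "2020-03-09T15:45:00",
    "2020-03-09T16:10:00",
]

counts = weekday_counts(revisions)
ranked = counts.most_common()  # busiest first; weekdays with no edits are absent
print("Busiest day:", ranked[0])
print("Quietest day with any edits:", ranked[-1])
```

Because a weekday with no edits at all never appears in the Counter, check for missing days before deciding which day was the least busy.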

Cleaning up Splunk

To clean up the logs that you have imported, follow the steps below. If you are using Splunk to analyse independent events, or for a test or CTF, it might be useful to purge all existing data first.

cd /opt/splunk/bin

Then

sudo ./splunk stop

Then

sudo ./splunk clean eventdata

Then

sudo ./splunk start

You would want to do this prior to a functional skills test involving Splunk as you don't want old data sets contaminating new ones you might upload.

Using the Splunk Educate platform

Splunk has a free education platform, which is significantly more detailed than the taster in this lab. If you want to know more, visit https://www.splunk.com/en_us/training/courses/splunk-fundamentals-1.html and, under E-Learning, click Register. You have to agree to the terms and conditions and then check out for 0.00 USD. It will ask you to register, so create a free account.

After you have created your account, you may need to go through the checkout for the Splunk Fundamentals course again. Once you have access to the course, you may want to click on Course Overview in the top right. You can start on module 4, "Getting Data In".